How I (Currently) Map Our AI Tool Stack for Dev, Research, Production and Non-Tech

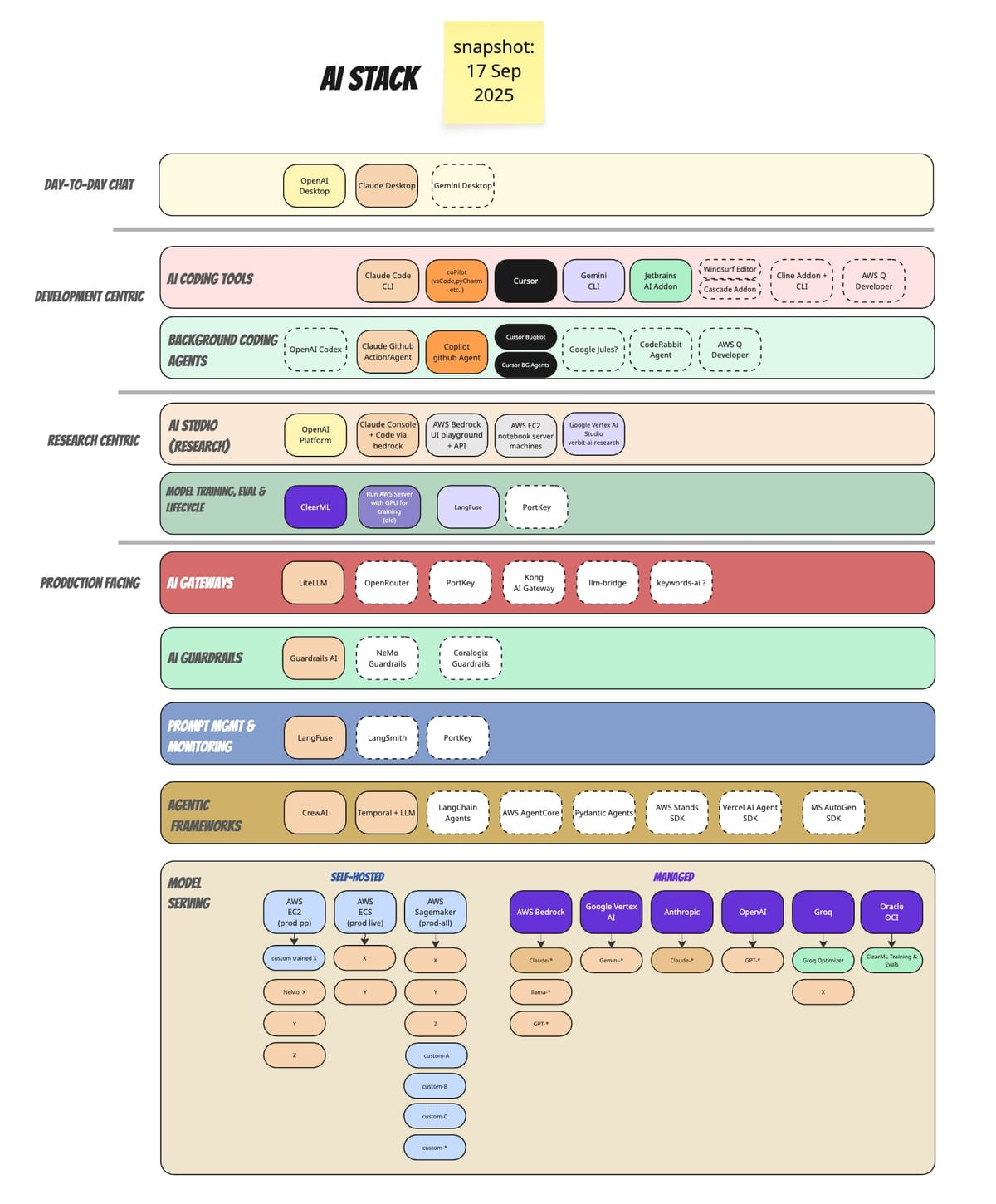

TL;DR: see the main image of this post. This is the structure:

AI Stack

│

├── Day-to-Day Chat

│

├── Development Centric

│ ├── AI Coding Tools

│ └── Background Coding Agents

│

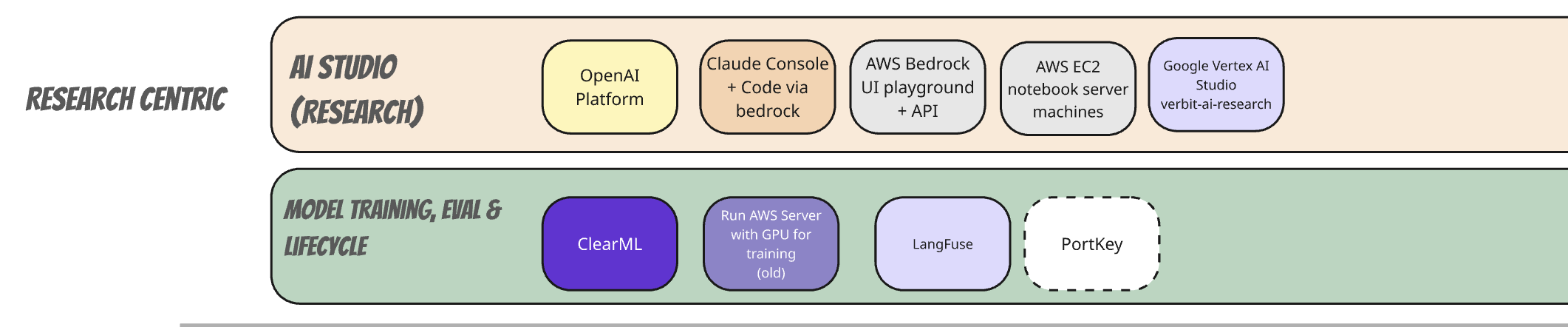

├── Research Centric

│ ├── AI Studio (Research)

│ └── Model Training, Eval & Lifecycle

│

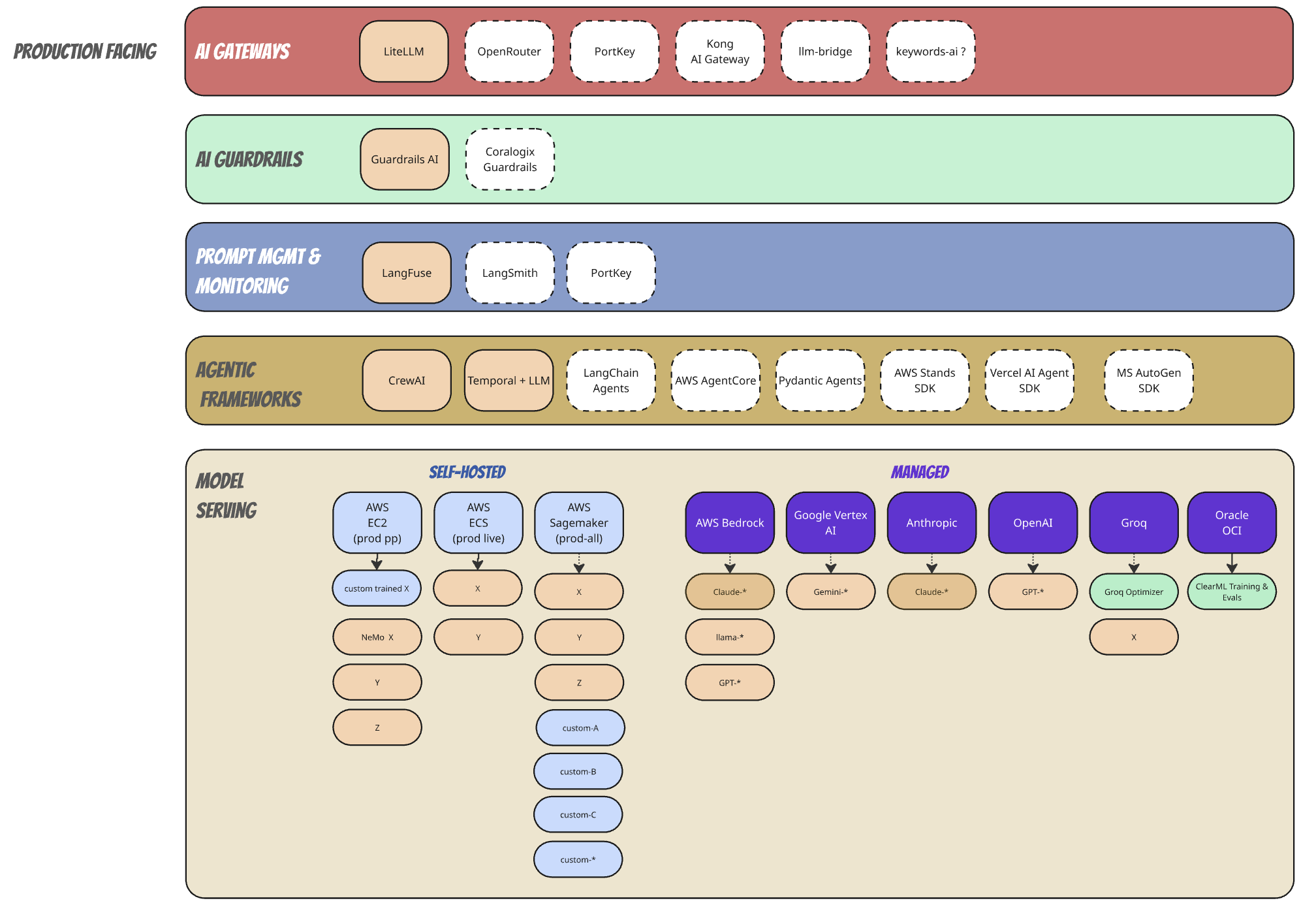

├── Production Facing

│ ├── AI Gateways

│ ├── AI Guardrails

│ ├── Prompt Mgmt & Monitoring

│ └── Agentic Frameworks

│

└── Model Serving

├── Self-Hosted

└── ManagedI like zooming out of things. And at some point I realized we don't have a good bird's eye view of all of the AI tools and frameworks we use every day at work. In fact, we didn't even have proper categories for how and where we use each tool. It's all a big mess and one excel file of expenses only gets you so far. Especially if you want to present this stuff to other people, explain why we need to focus on a specific category of tooling, or how our AI adoption is going in various "verticals" in the company, and for what purposes.

This might change next month (it probably will), but here are the categories and subcategories we ended up using for now. I'm sure there are more you can think of, so please drop me a line and tell me.

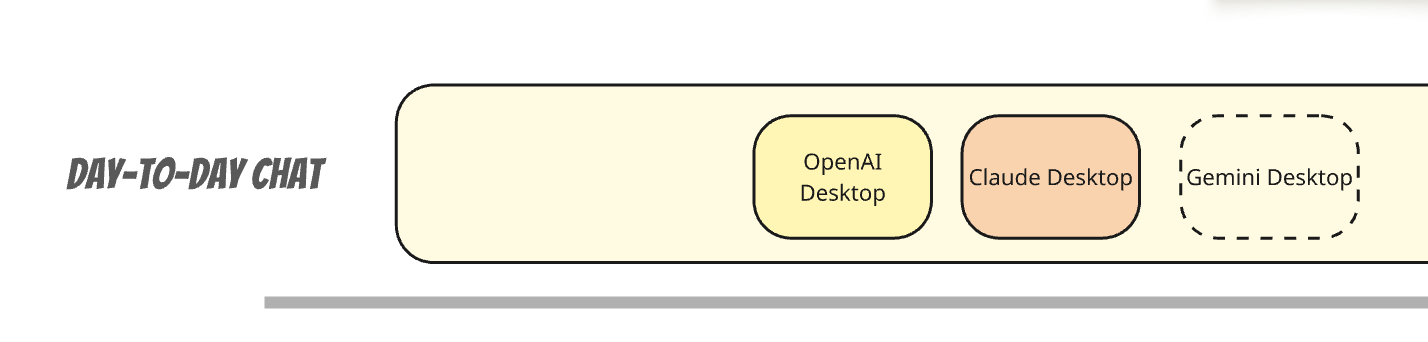

Day to Day Chat AI Tools

These "client" tools are used by both techies and non-techies at the company for various purposes. We usually use the "teams" version of things, and of course make sure data sharing for training is off, since we need to be HIPAA compliant (we have signed BAAs like this one with our vendors).

- Desktop AI Chat Client: OpenAI ChatGPT Teams

- Desktop AI Chat Client: Claude Teams

- Potential to check in the future: Gemini Chat in browser or desktop

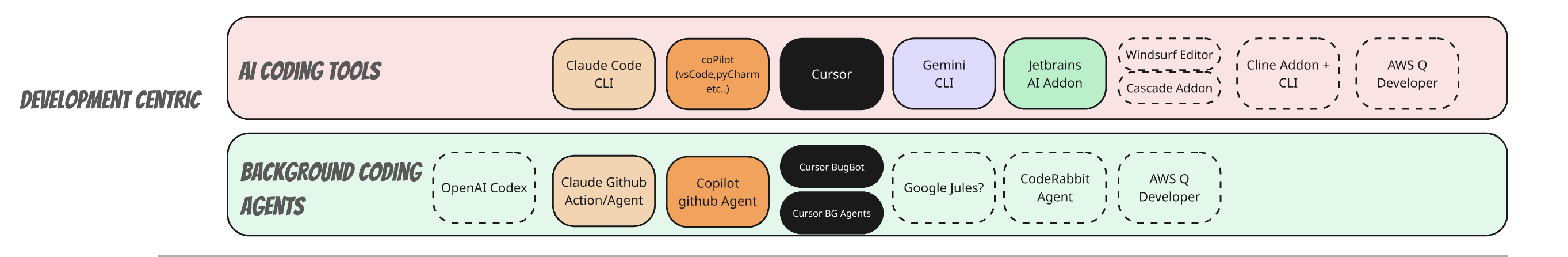

Developer Centric AI Tools

These tools are used day-to-day by developers either directly (in the IDE or CLI), indirectly (fire-and-forget background agents), or passively (background bug or review agents).

AI Coding Tools for Developers

Every developer gets to choose ONE of the following licenses on a monthly basis for day to day dev work:

- CLI: Claude Code (via Anthropic Console)

- CLI: Gemini CLI (via Vertex AI Studio)

- IDE: Cursor

- IDE Addon: JetBrains AI Addon (we have a lot of people, mainly in research, who don't want to leave the comfort of PyCharm for Cursor. The AI addon is acceptable as far as I'm concerned: it doesn't offer as many features as Cursor, but something is better than nothing)

- IDE Addon: Copilot. Since it supports most IDEs it's an option, though I feel it misses a lot of nice features.

- TBD : Windsurf, Cascade IDE/Addon, Cline, AWS Q Developer

Change your mind (and license) every month

Because things in the AI world are moving so fast, we don't sign yearly licenses for these tools; we renew each month. Monthly subscriptions let our developers and researchers "change their mind", and let our recommendations change from month to month, so we can cancel and switch tools without paying for something people aren't using anymore. We pay the monthly premium so we can keep up with things.

Developer Centric AI Background Agents

I initially thought that fire-and-forget background agents would play a bigger role for me and other developers in our org, but I still prefer a more "eyes-on" approach. Sending off a background agent to do a real bug fix or add tests, and then reviewing a huge PR with all the back and forth it will surely create, takes the same time if not more. Parallelizing this work might just create a bigger bottleneck of PRs full of AI slop to review, slop that should have been prevented as it was created, not after the fact.

That said, passive background agents such as Cursor BugBot have been very useful. Yes, they do produce some false positives, but they find real bugs, and finding them early really helps.

- Claude GitHub Actions: We have been experimenting with this and it seems pretty cool as a cheaper alternative to Cursor BugBot (which is $40 per developer per month on top of the regular Cursor licenses - sheesh!). It works really well, but if you don't have GitHub Enterprise, you need to install and maintain it per repo, so it's all about the scale of your team and repos.

- Cursor BugBot: A very professional product that produces good results. Very expensive in my opinion, and it needs more justification when you can install Claude GitHub Actions and pay per token. On the other hand, it takes less messing around than GitHub Actions and has nice dashboards.

- Copilot GitHub agent: Nice, but the results were not as good as the first two options here. Lots of noise and false positives, but the developer experience is nice and simple. It doesn't justify the cost in my opinion. (This was tested 6 months ago, so things could have changed dramatically since. I should try it again!)

- TBD: OpenAI Codex Agent, CodeRabbit, Google Jules, AWS Q Developer
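The flat-fee versus pay-per-token trade-off above comes down to simple arithmetic. Here is a rough sketch of it in Python. Only the $40 per developer per month figure comes from this post; the tokens-per-review number and the blended token price are made-up placeholders, so plug in your own measurements before drawing conclusions.

```python
def flat_monthly_cost(developers: int, price_per_seat: float = 40.0) -> float:
    """Per-seat pricing: cost grows with headcount, not with usage."""
    return developers * price_per_seat


def cost_per_review(tokens_per_review: int, usd_per_million_tokens: float) -> float:
    """Pay-per-token pricing: cost of a single automated PR review."""
    return tokens_per_review * usd_per_million_tokens / 1_000_000


def break_even_reviews(developers: int,
                       tokens_per_review: int,
                       usd_per_million_tokens: float,
                       price_per_seat: float = 40.0) -> float:
    """Number of reviews per month at which both pricing models cost the same."""
    return (flat_monthly_cost(developers, price_per_seat)
            / cost_per_review(tokens_per_review, usd_per_million_tokens))


# Placeholder inputs (assumptions, not measurements):
# 10 developers, 50k tokens per review, a blended $10 per million tokens.
reviews = break_even_reviews(developers=10,
                             tokens_per_review=50_000,
                             usd_per_million_tokens=10.0)
print(f"Pay-per-token is cheaper below {reviews:.0f} reviews per month")
```

Below the break-even number of reviews, pay-per-token wins on price alone; the per-repo setup and maintenance time mentioned above is not part of this calculation.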

Research Centric AI Tools

Our researchers use a combination of tools for day to day research and for managing the models and algorithms they end up creating and optimizing.

AI Studio for Researchers

These are the various "console" and "playground" tools from Anthropic, OpenAI, Vertex and Bedrock. Each one is used for testing specific models with specific prompt versions.

- AI Studios: OpenAI Platform, Anthropic Console (now renamed to Claude Console), Google Vertex AI Studio (since we also use Gemini models), and AWS Bedrock Playground

Model Training, Eval and Lifecycle tools for researchers

We originally did most of the training on manually managed EC2 GPU instances with scripts, and have since moved to ClearML for model eval and promotion. We are also slowly integrating Langfuse for prompt management and monitoring. We're still scared of putting it all in one place.

Production Facing AI Stack

I keep changing (mostly adding) categories here, as things are slowly splitting into patterns.

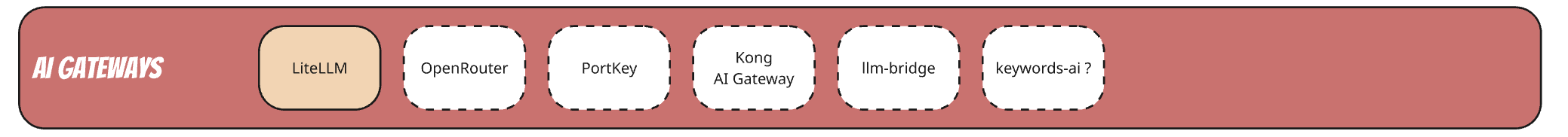

AI Gateways for Production LLM Use

AI gateways centralize all the LLM-related API calls in one place in our infrastructure, instead of having multiple services each manage their own API keys, quotas, rate limits, caching, security, model routing and more.

They also help with centralized monitoring, applying AI guardrails across the entire infrastructure or product line, cost & budget management, and changing underlying models and infrastructure relatively simply without interrupting dependent services.

AI gateways basically offer all the benefits of creating a single-responsibility micro-service that is internally managed instead of creating multiple sprawling dependencies and duplicate code across our product line and services.

There are also some disadvantages. For example, a centralized AI gateway must expose a generic API that supports all of the use cases its clients need. If there are gaps between what that API exposes and features that exist only in specific LLM services (say, in the Gemini API but not in the OpenAI API), then you must either find a gateway that supports the new feature, or settle for an API reduced to the lowest common denominator and ask your clients to use the 3rd party API directly, which defeats the purpose of using an AI gateway.

Anyway, we're still exploring how to solve this problem in a maintainable and sustainable way. I'll write here about our efforts soon.

- We're currently starting to use self-hosted LiteLLM, which seems to offer great value and many features out of the box. The biggest contender for us is (maybe self-hosted) Portkey, since it offers lots of extra enterprise-y features which would reduce the need for Langfuse, for example.

- TBD: Portkey, OpenRouter, Kong AI Gateway, llm-bridge (seems a bit too early in its lifecycle?)
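To make the gateway idea (and its lowest-common-denominator problem) concrete, here is a minimal in-process sketch in Python. It is not LiteLLM's or Portkey's API and not our production setup: the `Gateway` class, the `default-chat` alias, the `billing-service` client and the fake providers are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# In this sketch a "provider" is just a function from prompt text to completion text.
Provider = Callable[[str], str]


@dataclass
class Gateway:
    """Hypothetical stand-in for a central AI gateway service."""
    routes: Dict[str, Provider] = field(default_factory=dict)  # model alias -> provider
    budgets: Dict[str, int] = field(default_factory=dict)      # client name -> calls left

    def register(self, alias: str, provider: Provider) -> None:
        """Point a model alias at a provider; re-registering swaps the model."""
        self.routes[alias] = provider

    def complete(self, client: str, alias: str, prompt: str) -> str:
        """The single generic entry point every client service uses."""
        if self.budgets.get(client, 0) <= 0:
            raise PermissionError(f"{client} has no budget left")
        if alias not in self.routes:
            raise KeyError(f"unknown model alias: {alias}")
        self.budgets[client] -= 1
        return self.routes[alias](prompt)


# Fake providers standing in for real vendor SDK calls.
def fake_claude(prompt: str) -> str:
    return f"[claude] {prompt}"


def fake_gemini(prompt: str) -> str:
    return f"[gemini] {prompt}"


gateway = Gateway(budgets={"billing-service": 2})
gateway.register("default-chat", fake_claude)
first = gateway.complete("billing-service", "default-chat", "hello")

# Swap the underlying model centrally; the client call does not change.
gateway.register("default-chat", fake_gemini)
second = gateway.complete("billing-service", "default-chat", "hello")
```

Note that `complete(prompt) -> str` is exactly the lowest-common-denominator shape described above: any vendor-specific feature that doesn't fit through that signature forces either a wider gateway API or a client that bypasses the gateway.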

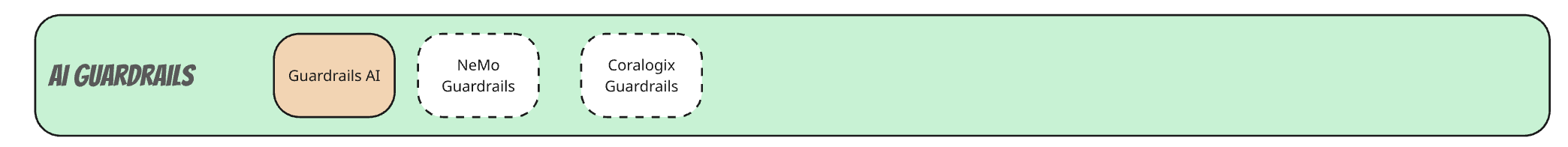

AI Guardrails for production use

We're still early in our journey with AI guardrails: what we have today is custom code and tools inside our services, all created in-house. It's still early to tell, but Guardrails AI seems to be the strongest contender for us to move to right now, and we'll be starting a POC integration soon.

- TBD: NeMo Guardrails, Coralogix Guardrails, others?
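For readers who haven't seen one, an in-house input guardrail can be as small as the Python sketch below. This is an illustration only, not our actual code and not the API of any of the products above: the `redact` function and the two regex patterns are assumptions, and real PII detection for compliance purposes needs far more than two regexes.

```python
import re
from typing import List, Tuple

# Deliberately simple, illustrative patterns (assumptions, not a complete PII detector).
PATTERNS = (
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
)


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace matches with a <LABEL> placeholder and report which labels fired."""
    findings: List[str] = []
    for label, pattern in PATTERNS:
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"<{label}>", text)
    return text, findings


# Run the guardrail before the prompt ever leaves the service.
clean, found = redact("Patient jane@example.com, SSN 123-45-6789, reports pain.")
print(clean)
print(found)
```

The list of findings is what makes a check like this useful beyond redaction: it can be logged or used to block the request entirely.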

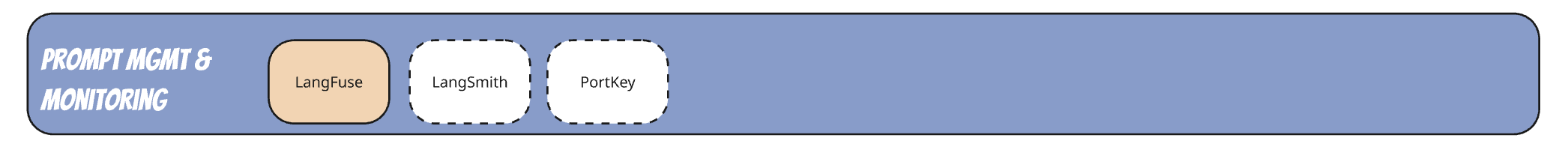

Prompt Management & Monitoring

We started, like others, with hardcoded prompts, then made them configurable via JSON, then moved them to a database, and eventually started looking at Langfuse for prompt management. We're currently in the early phases of testing it in our non-prod environments. Langfuse also provides powerful monitoring and observability for our prompts, which is pretty nice.

Being able to change prompts and compare the results of different versions is pretty powerful, and separating the prompt text from where it is used allows for better maintenance over time (single responsibility principle).
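The "separate the text from where it is used" idea can be sketched in a few lines of Python. This is not Langfuse's API; `PromptRegistry`, `publish` and `render` are hypothetical names for a toy in-memory registry, just to show what calling code looks like once prompts are versioned and looked up by name.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Toy in-memory registry: prompt name -> list of versions, oldest first."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> int:
        """Store a new version of a prompt and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        history.append(text)
        return len(history)

    def render(self, name: str, version: Optional[int] = None, **variables: str) -> str:
        """Fill in a prompt's $placeholders; version=None means the latest version."""
        history = self.versions[name]
        text = history[-1] if version is None else history[version - 1]
        return Template(text).substitute(variables)


registry = PromptRegistry()
registry.publish("summarize", "Summarize: $note")
registry.publish("summarize", "Summarize in one sentence: $note")

# Calling code only knows the prompt name, never the prompt text.
latest = registry.render("summarize", note="patient reports mild pain")
pinned = registry.render("summarize", version=1, note="patient reports mild pain")
```

Because callers reference a name and an optional version, comparing two prompt versions on the same input is just two `render` calls.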

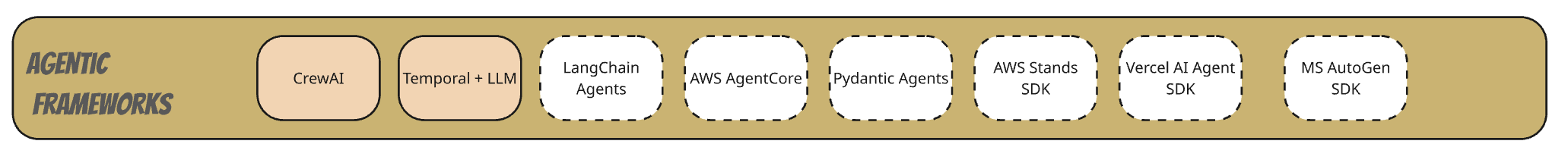

Agentic Frameworks for AI Production use

Other than using plain, relatively deterministic LLM workflows, we're experimenting both with building our own internal agentic loop for various workflows (using Temporal workflows with Claude Code) and with using tools like CrewAI.

- TBD: LangChain Agents, AWS AgentCore, Pydantic Agents, AWS Strands SDK, Vercel AI SDK, Microsoft AutoGen SDK
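For anyone unfamiliar with the term, an "agentic loop" boils down to: ask the model what to do next, run the tool it picked, feed the result back, and repeat until it says it's done. The Python sketch below shows that shape only. It does not use Temporal, Claude Code or CrewAI; `run_agent`, `scripted_model` and the `word_count` tool are hypothetical, and the "model" is a scripted stand-in for a real LLM call.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
# The "model" looks at the history and returns (action, argument),
# where action is either a tool name or "finish".
Model = Callable[[List[str]], Tuple[str, str]]


def run_agent(model: Model, tools: Dict[str, Tool], task: str, max_steps: int = 5) -> str:
    """Loop: ask the model for an action, run the tool, record the result, repeat."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, argument = model(history)
        if action == "finish":
            return argument
        if action not in tools:
            # Tell the model about its mistake instead of crashing.
            history.append(f"error: unknown tool {action}")
            continue
        result = tools[action](argument)
        history.append(f"{action}({argument}) -> {result}")
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(history: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM: call one tool, then finish with that tool's result."""
    if len(history) == 1:
        return ("word_count", "the quick brown fox")
    return ("finish", history[-1].split(" -> ")[1])


tools = {"word_count": lambda text: str(len(text.split()))}
answer = run_agent(scripted_model, tools, "count the words")
```

The `max_steps` cap is the important design choice here: without it, a confused model can loop forever, which is one reason a workflow engine with timeouts and retries is attractive for this kind of loop.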

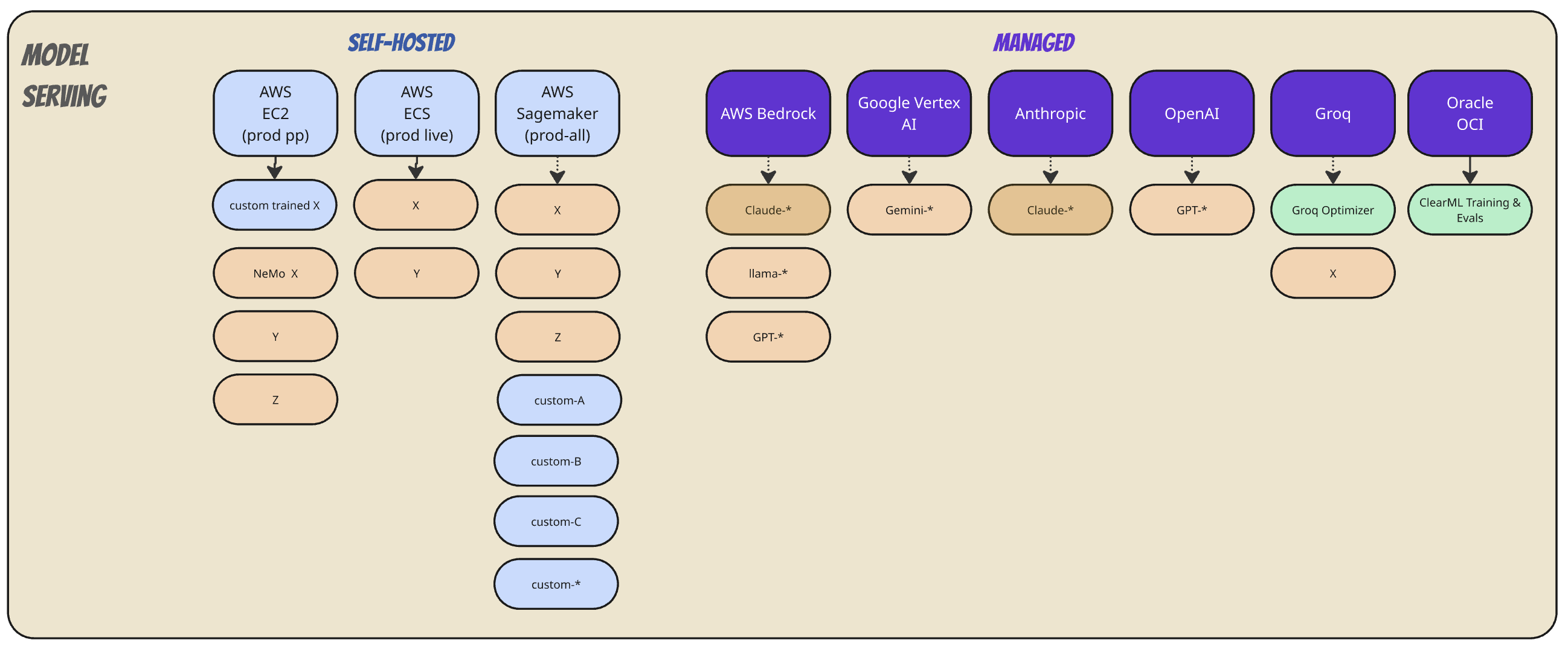

Model Serving

We serve both LLM based and NLP based ML models, with a combination of both self-hosted and managed services.

Self Hosted ML Model Service

We used to self-host directly on EC2, then moved to AWS ECS (we're not K8s centric yet), and are slowly moving to hosting any custom or 3rd party models on AWS SageMaker endpoints. We manage deployment and syncing via ClearML and CI jobs.

Managed ML Model Service

We use a host of 3rd party managed services for other models, namely LLMs like Claude, GPT and Gemini. We also use Bedrock-hosted models from the same companies for multi-region compliance, such as EU regions with HIPAA and GDPR requirements.

- AWS Bedrock: To host compliant region specific models

- Google Vertex AI: For Gemini models (both the API and endpoints in the EU, etc.)

- Anthropic: Direct console access for research, and APIs when we need the latest features or for routing balance

- OpenAI: Same as Anthropic

- Groq: for optimized access to some specific models

- Oracle OCI: For hosting models for training and evals before getting into prod.

Summary

This is a rather simplistic and ever-changing map of the categories we use and some of the services in each. It keeps changing, and I'd love to hear how you map your own stuff and whether there are other categories I should include. Send me a note.